AI investing, building, and policy, with Air Street's Nathan Benaich

Welcome back to The Form Playbook, our newsletter supporting founders building the future of regulated markets.

This month:

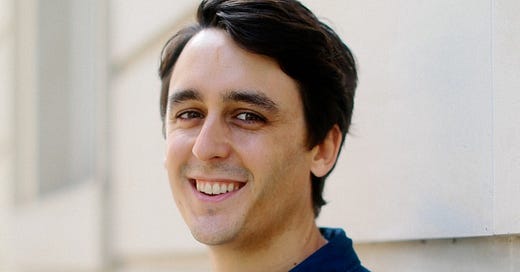

🎙️ Interview with Nathan Benaich, GP at Air Street Capital, on the opportunity for AI companies, investing, and policy

📰 News & Views: How AI regulation is shaping the opportunity for AI startups and VCs

⏰ Form Updates: Leo on R&D tax credits and regulators, plus portfolio news highlights

Nathan Benaich, VC, on the opportunity for AI companies, investing and policy

Nathan Benaich is Founder and GP of Air Street Capital, a VC firm investing in AI-first startups. As co-author of the State of AI report, which routinely covers both technical and political developments in AI, and as founder of Spinout.fyi, which campaigns to improve university spinout creation, Nathan has a unique insight into the intersection of AI investing, company-building and regulation. As always, we’ve picked out the key takeaways for founders here, but don’t miss the full interview for insights on founder archetypes, defensible opportunities in AI amid regulatory change and open source development, and the need for more European Dynamism.

Nathan’s Advice for Founders:

The most promising founders need to demonstrate a rare combination of razor-sharp customer insight and technical brilliance. Show you understand both your customer’s pain points and how technology fits into their way of working — even in 2023, not every problem will have a GenAI-shaped solution.

As AI progress accelerates, the most exciting opportunities are likely to lie in ‘full stack’ ML companies — not just licensing out a model, but building a fully-integrated product that solves a problem end-to-end.

The politics and policy of European tech will shape startups’ opportunities: as tech geopolitics intensifies, we will need new defence-focused companies; as researchers lead the way in strategic technologies, we must reform spinout policy; and as AI regulation comes forward, we must avoid a panicked rush to throttle real-world progress.

Tune in tomorrow, Tues 13th, to TBI’s AI and the Future of Britain, and find the full interview transcript at the bottom of this email.

News & Views: What AI regulation means for VC

As calls grow for tighter rules on AI, we’ve been thinking through the impact on startups and VC. We’re early, and there will be many more questions to come, but we’re particularly watching three issues which will shape the startup opportunity in AI:

New regulatory regimes will shape unit economics and speed of execution: The EU AI Act is nearly in force, and the UK is wobbling on its initial, sectorally-focused plans which said little about general purpose models. Meanwhile Japan let the text and data-mining cat out of the bag, stating that it won’t enforce copyrights on data used in AI training, just before Adobe, confident in its licensing agreements for model training, said it will indemnify customers who use its genAI tooling against any IP claims. As all this crystallises into regulation, there’s a risk many responsible, narrowly-focused startups get blown off track by regimes designed for bigger companies with large compliance budgets. Regulators, too, rapidly need more funding & expertise to limit the impact of these burdens. For decades, strong regulatory regimes in fintech, health and other sensitive sectors haven’t put off founders going after vast opportunities, but crucially these are mature, well-trodden regulatory paths. As new rules take shape, let’s be mindful of burdens but not at the expense of speed, safety and clarity.

Open source and regulation will set the parameters for defensible business models: Whether companies open source their models is a function of a) their commercial incentives and b) how they trade off AI safety risks against open progress. Meta does not (currently) sell access to its models, and can therefore support an open, collaborative approach to innovation while eating away at others’ moats, as Google has allegedly acknowledged. But in the process Meta has also come under fire from US senators. Expect the feedback loop between open source, responsible deployment and regulatory scrutiny to continue — shaping how and where defensible businesses can be built.

Competition regulators are watching AI infrastructure: The UK’s CMA launched an initial review of foundation models, examining the market’s likely competitive dynamics — again shaped by open source — and the impact on consumers. Despite the initial outcry, it’s legitimate for the competition regulator to develop an early understanding of this transformational industry. But it also follows Ofcom proposing to refer the UK cloud computing market to the CMA for further investigation, after finding practices and market features that were limiting competition. If the AI infrastructure market begins to resemble the cloud market, expect future exits to be scrutinised.

All of this is humming along as the US and UK face elections next year, with legislative windows closing. The UK in particular is jostling to be the home for international AI governance — expect to hear more at the government’s AI Safety conference this Autumn.

Form Updates

Leo was in the New Statesman discussing Rishi Sunak’s hopes for a ‘Unicorn Kingdom’ and spoke at TechUK on the UK’s scale-up problem, highlighting how cuts to R&D tax credits, under-resourced regulators and waning confidence in UK listings are holding back progress.

You can also catch Leo on Tues 20th June at the RealTech Conference, hosted by Sam Cash.

Andrew co-hosted another event for TxP, the community for folk in tech and policy, on AI tech & geopolitics, featuring AI policymakers and investors.

Ophelos hosted Break the Cycle, exploring the role for AI to help raise standards in debt resolution, while Sylvera hosted the annual Carbon Markets Summit, featuring global leaders in carbon markets and policy.

As always, if you know anyone building the future of regulated markets, or you’re an investor thinking about how policy affects your portfolio, get in touch.

Full Interview with Nathan Benaich:

FORM: What are you looking for from founders building and pitching very early-stage AI companies (unique product / user insight vs technical breakthroughs vs commercial traction)? How exempt are early AI companies from the increasing emphasis in private markets on revenue?

NATHAN BENAICH: For me, the most promising founders have two things. Firstly, razor-sharp insight into their customers — they understand their operating context, their pressures, pain-points and goals on the job, and how new technology would fit into their way of working. Secondly, the right combination of technical brilliance and pragmatism when it comes to selecting the right tools to use or build. This combination of customer centricity and technical pragmatism is rare…even in 2023, not every problem will have a GenAI-shaped solution.

As the speed of AI development increases, where do you see the opportunity for defensible value? What’s changed since you started investing in this area, before the latest craze over generative AI?

The more things change, the more they stay the same. Back in the early days of Air Street, I was arguing that the most exciting opportunities lay in ‘full stack’ machine learning businesses. This means that, instead of building part of the stack and licensing it out to someone else to solve a problem, you build a business that creates a fully-integrated ML product that solves the problem end-to-end.

The incredible progress we’ve seen in GenAI only reinforces this. To put it overly simplistically, a company that licenses out a model to help big pharma is probably going to capture less economic upside than one that builds an end-to-end drug discovery platform that owns drug assets.

You also recently had an FT op-ed calling for more European Dynamism, focusing on defence but also touching on tech regulation and procurement. When you pitch LPs on the future of Europe, what’s the response like? Is it hard to make the case, and if so why?

The response to both the European Dynamism piece and my other policy interventions illustrates the problem very well. A large number of civil servants, founders, and fellow investors got in touch privately to express their agreement, but only a fraction felt able to speak out publicly. It seems like there’s a code of silence in the European tech ecosystem: it’s in no one’s short-term interest to admit we’re underperforming, even though it’s in everyone’s long-term interest to fix it. European Dynamism is a theme I’ll definitely be returning to in the future, so you should expect to hear more from me.

Whatever their macro worries about Europe, LPs understand that there are founders with incredible potential if you know where to look. That’s why I think we’ll continue to see the rise of smaller, specialist investors who are able to scout out the most promising early-stage businesses, especially now that we don’t have the rising tide of low interest rates to lift all boats.

Every year, the State of AI report includes a Politics section, detailing the latest domestic & geopolitical conflicts over AI. What trends stand out to you? How do these macro issues filter down to companies & shape their operating environment?

It’s hard to miss the intensifying US-China technological arms race. The US is unafraid to cannibalise its allies, whether it’s on semiconductors or cleantech, so we can expect to see the push to lure the most promising Europeans across the Atlantic continuing. The firepower the US is placing behind the Inflation Reduction and CHIPS Acts should serve as a wake-up call for Europe. Reforming spinout policy and building out AI-native computing infrastructure should be our starting points.

Relatedly, as we’d started tracking in last year’s State of AI Report, geopolitical tensions are creating opportunities for a new wave of defence tech innovators, beyond established names like Anduril and Palantir. We’re beginning to see welcome signs that the US is making a systematic push to contract with new start-ups, creating the vital market these businesses need. For the founders and investors brave enough to operate in this space, there’s significant potential upside. We now just need Europe to start making moves on a similar scale.

Most recently, we’ve seen the existential risk discussion explode into the mainstream for the first time. While I believe that AI should be developed robustly and reliably, it’s crucial that we avoid a panicked rush to throttle real-world progress in the name of preventing what, for the moment, are entirely hypothetical disasters. If you want to change policy because you believe that we are on the brink of unleashing omnipotent AI that will destroy the world, it’s on you to explain how that’s going to happen.

How does regulation affect the venture opportunity in AI? How much do you care about this and how much thinking do you expect to see from founders about this?

At the moment, the AI-specific regulatory efforts we’re seeing are still some way off being implemented or affecting the kinds of early-stage businesses I back. If you take something like the UK Government’s recent AI White Paper, the direction of travel is positive, but many of the proposals just aren’t concrete enough to shape investment decisions.

I am, however, troubled by the sweeping calls for regulation we’re seeing from some of the biggest labs and hope that policymakers proceed with caution. While I don’t doubt many in these organisations are motivated by genuine ethical concerns, there’s a long history of incumbents with deep pockets and large compliance teams weaponising regulation. The incentive is particularly strong once you’ve acknowledged that open source is one of your biggest competitors.

You’ve been a vocal critic of UK universities’ approach towards spinouts — why is this issue so important to you?

The UK has some of the best universities in the world for science and technology, but last year only 5% of the capital invested into British technology companies went into spinouts. This is a huge wasted opportunity, especially when so many spinouts are working on AI, biotech, quantum, and other strategically important technologies.

While universities point to the same small handful of success stories, they don’t talk about the founders whose businesses never progressed beyond Seed or Series A after they were set up to fail, nor the founders who found the spinout process so adversarial that they simply gave up. Meanwhile, I’ve heard these stories again and again in the 200+ responses to the spinout.fyi survey.

My frustration stems from just how avoidable this is. Instead of approaching the spinout process as a shakedown, there are countries where universities take a pragmatic, long-term view. In the US, technology transfer offices conclude deals in weeks rather than months, while often taking significantly smaller equity stakes. Meanwhile, in Scandinavia, many academics take the option just to buy their university out of the IP and retain full control of their businesses.

Hit reply with follow-up questions, suggestions of technology or policy leaders we should interview, or get in touch if you’re building at the frontier of tech and regulation.