Self-driving cars can make philosophy relevant again

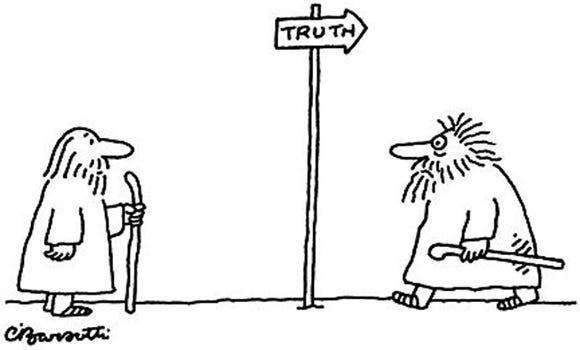

“Philosophy is interesting, but irrelevant”. I’ve heard this a lot. AI, and self-driving cars in particular, could completely change this.

A lot of discussions on ethics focus on codifying our ethical intuitions. Debates then home in on extremely unlikely events ("Black Swan" events) that test our models. Something like:

“I think that it is always true that events (a, b, c, etc.) in set X are morally right.”

“But what about Black Swan event d? Event d is rare, but it is nevertheless a member of set X, and event d seems to be morally wrong. This suggests that not all events in set X are morally right.”

The “Trolley Problem” is a famous example of this. These (often bizarre) scenarios test and often undermine our models, but in general they are not seen as practically very important. Why?

I reckon it’s something to do with them being black swan events: by their nature, they are rare. They are so rare that they are statistically extremely unlikely to take place anywhere near you, so even if there were a definitive answer, teaching people that answer would almost never be useful. Who has ever actually had to implement a decision on a Trolley Problem?!

How do self-driving cars change this?

Well, these black swan events should (hopefully) be even rarer than they are today. But does it follow that finding an “ethical” answer in these cases is unlikely to be useful for any individual? No. What’s going to happen is that someone (or, more likely, a company) will be able to hard-code how a whole fleet of cars acts in exactly these extreme circumstances. Suddenly, we don’t just get to decide what the right outcome in a black swan event is; we actually have the power to influence how this whole set of extreme events plays out, which seems pretty important.

So analysing these black swan events is usually regarded as irrelevant, because any answer is unlikely ever to be implemented by you or by anyone you know. But now we’re not talking about a single marginal event; we’re talking about every one of these marginal events, and we have both the power to determine how they play out and the time to work it out. Enter the philosophers…

So what?

In the long run, I don’t think it will take many ‘black swan’ events, and perverse outcomes, for governments to at least try to regulate directly how cars behave in certain circumstances. That will suddenly make deciding the “best outcomes” in these events very important. And for the software companies themselves, any attempt to use their cars’ decision-making in these situations as a competitive advantage might be in vain, since regulation could require every car to behave the same way.